AI Can’t Debug. You’re Fired Anyway

There is a huge push at work to use AI, and to share our individual learnings.

I suspect that we are looking to use AI to lay off sections of our programming staff at the first opportunity, despite our CTO’s reassurances that AI “will just make us all more productive”.

So Microsoft Research’s recent blog post introducing debug-gym caught my interest. debug-gym is a sandboxed little theme park where AI agents can finally play with debugging tools, setting breakpoints and printing variables. It should interest you too, because our collective future is at stake.

Stop Right Now, Thank You Very Much

We still need the human touch.

Because while AI is now being trained to poke around in pdb and take tentative peeks at variable values, like someone dipping a toe into the shallow end, it’s still not very good at it.

According to Microsoft,

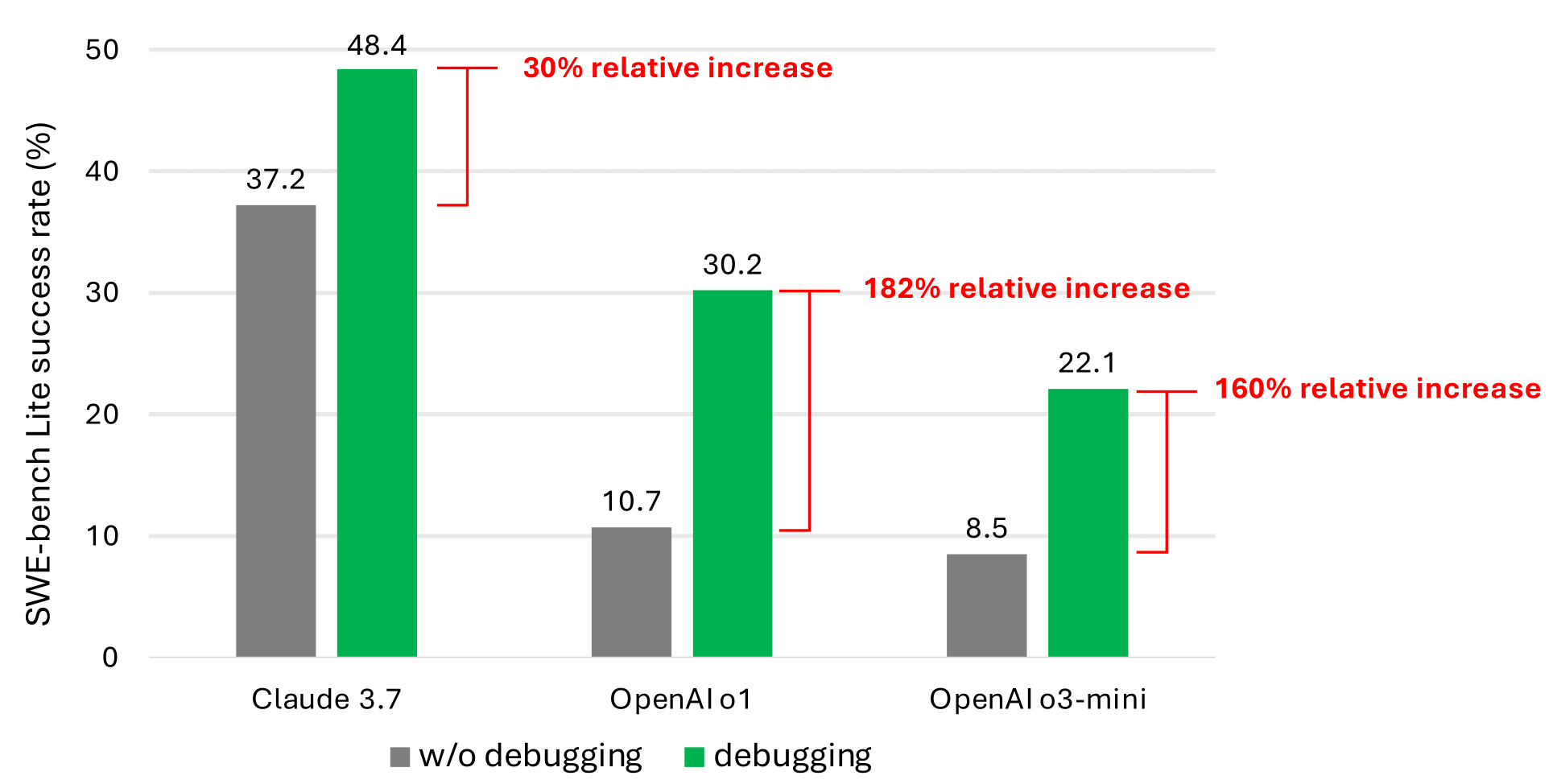

“even with debugging tools, our simple prompt-based agent rarely solves more than half of the SWE-bench Lite issues.”

That’s, at best, about half the bugs fixed. Not great, not terrible, unless you’ve just laid off half your dev team and this glorified syntax stumbler is their replacement.

More Hope

We might still be able to hang on to our jobs if we can convince leadership of the findings in this research.

The same post says current AI agents struggle because there is so little training data showing step-by-step debugging (debugging traces and the like), which makes it hard for them to learn how to, you know, debug.

Which is awkward because, as the authors admit,

“most developers spend the majority of their time debugging code, not writing it”

It’s easy to write code, but difficult to debug it. AI is good at the former, but we’re all good at that.

AI, on the other hand, is like that intern who says they understand the problem, submits a fix, and breaks everything. Then you look at the PR and realize it just commented out the error.

An Unclear Future

Microsoft’s hope is that by training LLMs in debug-gym, they’ll learn to interact with code more like humans do. But even the example they give, an AI getting tripped up by a mislabeled column, is the kind of bug any junior dev would solve over a five-minute chat and a coffee.

The AI? It just gives up like a dev who rage-quits the sprint on day one.
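To make that five-minute kind of bug concrete, here’s a small, hypothetical Python sketch. It’s my own invention, not the example from Microsoft’s post: a CSV export whose `price` and `quantity` headers have been swapped. The data and function names are made up for illustration. The code runs without complaint and returns a plausible-looking number; only a quick look at the actual values, the kind of thing `p rows[0]` would show you at a pdb prompt, reveals the problem.

```python
import csv
import io

# Hypothetical export: the headers for "price" and "quantity" were swapped,
# so the "price" column actually holds counts and vice versa.
RAW_CSV = """item,price,quantity
apple,3,0.50
pear,2,0.75
"""


def load_rows(text):
    """Parse CSV text into a list of dicts keyed by header name."""
    return list(csv.DictReader(io.StringIO(text)))


def average_price(rows, price_col="price"):
    """Return the mean of the values in the given price column."""
    return sum(float(row[price_col]) for row in rows) / len(rows)


rows = load_rows(RAW_CSV)

# The "obvious" call runs fine and returns a believable number (2.5)...
print(average_price(rows))

# ...but peeking at one row, as you might with `p rows[0]` in pdb,
# shows that "price" holds whole counts and "quantity" holds prices.
print(rows[0])

# The five-minute fix: read the column that really holds the prices (0.625).
print(average_price(rows, price_col="quantity"))
```

No error is ever raised, which is exactly why this needs someone to look at the data rather than just at the stack trace.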

So it looks like the plan is to fine-tune a smaller “info-seeking model” that can help an LLM find the right context before it suggests a fix. Like a little debugging butler who hands Copilot the tools it needs. A digital Jeeves. An AI assistant to an AI assistant. Will it be able to check for hallucinations as well? Because Claude keeps making up functions in my codebase.

My Fear

Despite all this, companies are moving to cut human developers because leadership has watched AI open a pull request and immediately concluded it’s “game over” for devs.

Meanwhile, devs at my place are saying that AI is simply a glorified autocomplete that doesn’t understand scope.

Of course, the truth is somewhere in between, but I’m more worried about my job being cut in the short term because leadership misjudges the quality of AI than about my coding abilities being surpassed by AI any time soon.

I’ll let you know if I’m still employed in six months’ time.

Conclusion

So here we are, teaching machines to fumble through breakpoints while failing to train juniors to be well-rounded, competent developers.

If your AI pair can’t even handle a mislabeled column, maybe the human-free coding future is a bit further away than we thought?

So please, please, think about training junior developers. In 10 years they’ll actually still be here, as senior developers who know how to use breakpoints. Just a thought.